Excessive use of social media has been shown to contribute significantly to mental health issues such as anxiety and depression. Research suggests that everyday interaction with social media platforms can negatively affect emotions, particularly when individuals start comparing themselves to others. This self-judgment is often skewed by the idealized portrayals of life on these platforms, where individuals share only the most polished versions of themselves. As a result, viewers may lose confidence or feel anxious about their own lives, which can intensify feelings of depression [8].

Scrolling continuously through social media feeds, especially for long periods, is now a typical behavior that can lead to a range of psychological issues. Studies have demonstrated that excessive social media use leads to heightened emotional responses, such as stress and sadness, and decreases overall life satisfaction [13]. This pattern is often described as "social media addiction," in which individuals feel compelled to check their feeds frequently, leading to a weaker emotional connection with the real world and poorer emotional regulation.

Addiction to social media and smartphones can further aggravate these emotional disturbances. Research indicates that individuals who spend more time on these platforms more often experience problems with emotional control, such as irritability, restlessness, and a diminished ability to manage stress effectively [1]. Over time, these emotional disruptions may contribute to deeper mental health issues, including depression and anxiety.

Moreover, excessive social media use is also linked to decreased productivity. As individuals spend more time scrolling and engaging with content, they unintentionally lose hours that could otherwise be spent on more productive activities. This constant engagement leads to procrastination, as users become absorbed online, leaving little time for tasks that require focus and effort [13,14]. Declining productivity can in turn erode self-confidence, because individuals may feel unaccomplished or inadequate due to a lack of real-world achievements.

Particularly concerning is the impact of social media on the younger generation, especially those between the ages of 13 and 25. This age group is especially vulnerable because its members are at a stage where identity formation is crucial. Constant exposure to curated, often unrealistic portrayals of life can have a profound effect on their self-esteem and mental health. According to a study by the Pew Research Center, 93% of teenagers report using social media platforms regularly, and many experience adverse effects on their mental well-being [12].

With the rise of social media usage among younger individuals, critically examining the time spent on these platforms is essential. Establishing boundaries around social media use and focusing on digital detoxes could mitigate the adverse effects. Encouraging mindful engagement with technology and promoting face-to-face social interactions may help improve mental health outcomes and reduce feelings of anxiety and depression related to social media use.

Distinguishing authentic content from misleading content on social media has become increasingly important due to the rise of misinformation, fake news, and manipulated media. With billions of users worldwide, social media platforms have become many people's primary source of information and communication. However, this also means that content shared on these platforms can easily be misleading or false, which can have significant implications for public opinion, political processes, and individual well-being. To address this, social media CEOs and platform owners play a critical role in ensuring that the content shared on their platforms is authentic, reliable, and aligned with ethical standards.

One of the key solutions social media platforms can implement is robust content verification systems. These systems would employ automated algorithms, human moderators, and crowdsourcing to verify the authenticity of content before it is widely disseminated. By cross-referencing information with trusted sources and fact-checking organizations, platforms can ensure that misleading or false content is flagged before it goes viral [11].

Social media platforms like Facebook and Twitter have already started experimenting with similar systems, such as flagging posts that fact-checking reviews identify as false or misleading. For instance, Facebook uses fact-checking partners like PolitiFact and the Associated Press to evaluate the accuracy of posts, while Twitter labels tweets that contain misleading information or violate its policies [17]. These initiatives are steps toward creating more responsible and accountable platforms, but they must be continuously refined to address emerging challenges.

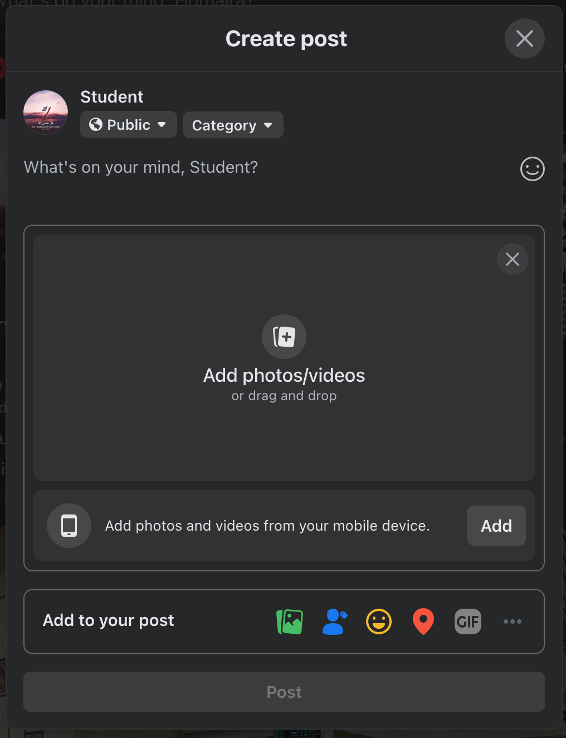

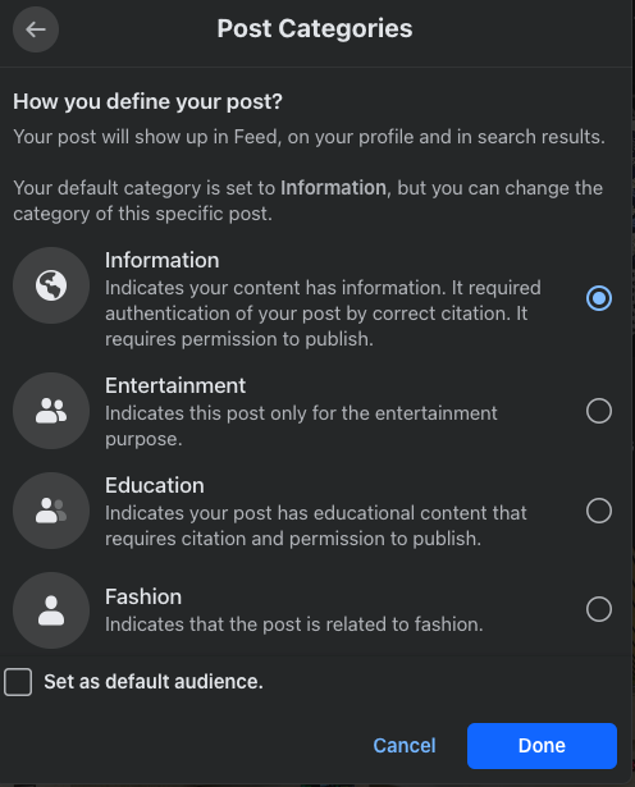

Before users can upload any content, whether audio, video, or text, they should choose the category that best represents the nature of that content. This categorization helps organize and classify the content, making it easier for the platform to manage it and deliver it to the right audience. Categories might include options like Information, Entertainment, Education, Fashion, Technology, News, and others, depending on the platform's focus.

By selecting a category, users ensure their content is appropriately tagged and classified, allowing for more accurate recommendations, searches, and content curation. It also helps the platform implement better moderation strategies, ensuring content is reviewed according to its type. For example, educational videos may be held to different accuracy and quality standards than entertainment content. Viewers can also see a tag on the video that shows its content category.

Additionally, this categorization can streamline the content discovery process for viewers. When users browse through the platform, they can filter content by specific categories, enabling them to find the type of content they are most interested in. This approach also promotes more targeted audience engagement and ensures that content is presented in a structured, easy-to-navigate manner.
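The categorization and filtering described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Category` values come from the examples listed in the text, while the `Post` type and the `filter_by_category` helper are hypothetical names, not part of any real platform's API.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    # Example categories taken from the text; a real platform would define its own.
    INFORMATION = "Information"
    ENTERTAINMENT = "Entertainment"
    EDUCATION = "Education"
    FASHION = "Fashion"
    TECHNOLOGY = "Technology"
    NEWS = "News"


@dataclass
class Post:
    # Hypothetical minimal post record: a category is required at creation time.
    title: str
    category: Category


def filter_by_category(posts: list[Post], category: Category) -> list[Post]:
    """Return only the posts tagged with the requested category."""
    return [post for post in posts if post.category is category]


posts = [
    Post("Intro to photosynthesis", Category.EDUCATION),
    Post("Weekend comedy sketch", Category.ENTERTAINMENT),
    Post("Plant cells explained", Category.EDUCATION),
]
education_titles = [p.title for p in filter_by_category(posts, Category.EDUCATION)]
print(education_titles)
```

Because the category is a required field of `Post`, an upload without a category cannot be represented at all, which mirrors the requirement that users choose a category before uploading.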

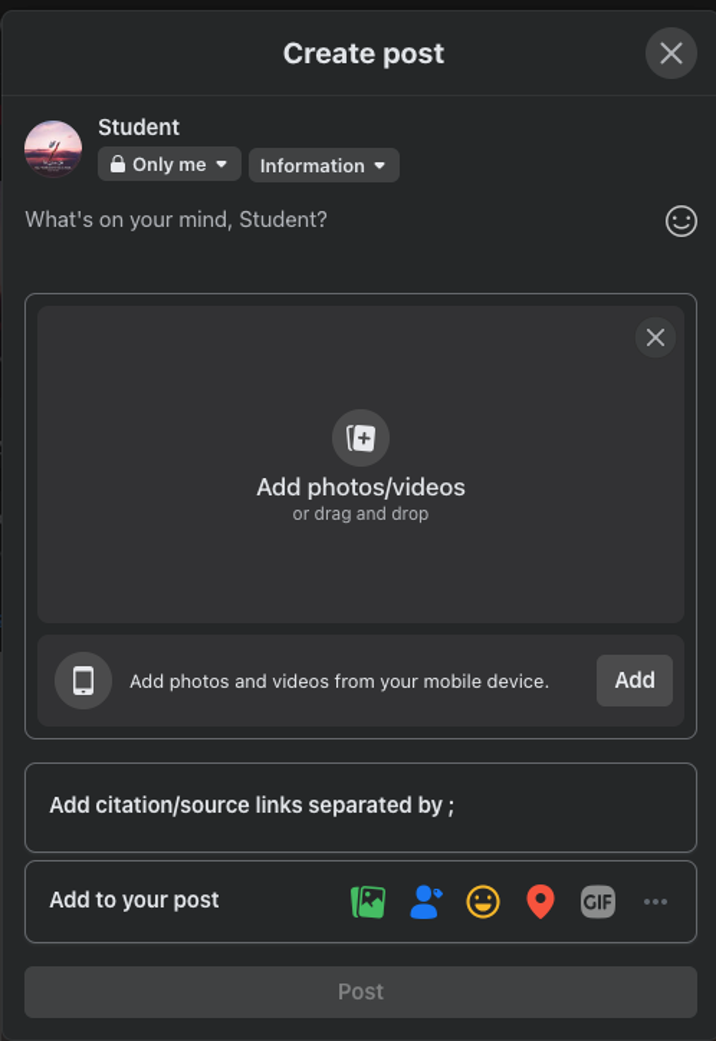

When users select the Information category for uploading their content—whether audio, video, or text—they should be required to provide relevant citation/source links to authenticate the content. This step is essential for ensuring that the shared information is reliable, accurate, and verifiable.

Here is how the process would work:

By selecting "Information," the user indicates that the upload is meant to be factual, informative, or educational. This may include news reports, research findings, data analysis, tutorials, or any other material that aims to present factual information.

The user must provide citations or source links to maintain the content's credibility and ensure it is based on verified data or reliable sources. These links could be references to reputable websites, academic papers, books, or other authoritative sources that back up the information presented in the content. For example, if the user is uploading a video discussing climate change, they might be asked to provide links to scientific journals or government reports as their sources.

The purpose of asking for citations is to allow the platform to authenticate the content. Once the citation/source links are provided, the platform can use algorithms or human reviewers to verify whether the information in the content matches what is stated in the provided sources. The links could point to articles, research papers, or other verifiable content the platform can reference to validate the uploaded content.

Requiring citation links is a way to ensure that the content adheres to factual accuracy and is not misleading or false. For example, when presenting data or claims, the user must support them with authoritative references. This step helps to reduce the spread of misinformation and promotes sharing well-researched, accurate content on the platform.

The citation requirement establishes a standard of trust and accountability. Users know that the platform will hold them accountable for the authenticity of their content, and it signals to viewers that credible sources back the content. This builds trust in the platform and encourages users to share well-researched and fact-checked information, contributing to a more reliable and informed online community.

Besides improving the quality of the content, this step also serves as an educational tool for users, encouraging them to engage with high-quality, authoritative resources. It also highlights the importance of citing sources and verifying information before sharing it with others, which can help users become more responsible and critical consumers of information. Viewers can also see the tag on the video to verify its content category.
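As a rough illustration of the citation requirement, the following sketch rejects an "Information" upload that has no source links or that contains links that are not well-formed URLs. The function names and the `CITATION_REQUIRED` set are assumptions made for this example. The check is purely syntactic: it does not fetch the links or judge whether a source is reputable.

```python
from urllib.parse import urlparse

# Categories that require citations; this set is an assumption for illustration.
CITATION_REQUIRED = {"Information"}


def is_well_formed_url(link: str) -> bool:
    """Check only that the link looks like an http(s) URL; it is not fetched."""
    parsed = urlparse(link)
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


def validate_upload(category: str, citation_links: list[str]) -> list[str]:
    """Return a list of problems; an empty list means the upload may proceed."""
    problems = []
    if category in CITATION_REQUIRED:
        if not citation_links:
            problems.append("At least one citation/source link is required.")
        for link in citation_links:
            if not is_well_formed_url(link):
                problems.append(f"Not a valid link: {link}")
    return problems


print(validate_upload("Information", []))
print(validate_upload("Information", ["https://example.org/report"]))
```

Returning a list of problems rather than a single boolean lets the upload form tell the user exactly which link needs to be corrected.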

The proposed algorithm follows a structured approach to verify the authenticity and accuracy of video content. Here is a more detailed breakdown of how the process works:

The first step involves extracting the spoken or visual content from the video and converting it into a written text script. This can be achieved through speech-to-text transcription technology, which listens to the audio in the video and converts the dialogue, narration, and any other spoken elements into a written form. This script becomes the core representation of the video’s content, capturing all the verbal information conveyed.

Once the entire script of the video is generated, the next task is to summarize it. The algorithm analyzes the text script and extracts the main points, key ideas, and essential details. This summary distills the video content into a more concise form, providing a quick overview of the video's main messages or themes. Summarization algorithms can use various techniques, such as natural language processing (NLP), to identify and condense the most important content.
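The text does not specify a particular summarization technique, so the sketch below swaps in one simple option: frequency-based extractive summarization, which keeps the sentences whose words occur most often in the transcript. The function names and the short stop-word list are assumptions for illustration; a production system would more likely use a trained NLP model.

```python
import re
from collections import Counter

# A tiny stop-word list for illustration; a real system would use a fuller one.
STOP_WORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "are",
              "it", "that", "this", "for", "on", "with", "as", "by", "was"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep the words that are not stop words."""
    return [w for w in re.findall(r"[a-z0-9']+", text.lower()) if w not in STOP_WORDS]


def summarize(text: str, max_sentences: int = 2) -> str:
    """Keep the sentences whose words are most frequent in the whole text."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    frequencies = Counter(tokenize(text))

    def score(sentence: str) -> float:
        words = tokenize(sentence)
        # Average word frequency, so long sentences are not favored automatically.
        return sum(frequencies[w] for w in words) / len(words) if words else 0.0

    top = sorted(sentences, key=score, reverse=True)[:max_sentences]
    # Restore the original order so the summary still reads naturally.
    return " ".join(s for s in sentences if s in top)


transcript = ("Sea levels are rising. Rising sea levels threaten coastal cities. "
              "I had pasta for lunch.")
print(summarize(transcript, max_sentences=2))
```

In this toy transcript the off-topic sentence scores lowest and is dropped, which is the behavior the summarization step relies on.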

If the video references external sources, studies, articles, or other citation links, the algorithm navigates to those links to gather the relevant information. The algorithm then generates a summary for each source, much like it did with the video’s script. This puts the external references into the same concise form, making them easier to compare against the video’s content.

After generating summaries for the video content and for the source/citation links, the algorithm compares the two. It checks for consistency, looking for alignment in the key information, facts, and statements, and evaluates whether the details presented in the video match what is stated in the sources. This comparison could involve checking for direct matches of facts, figures, and claims, or verifying that the video content accurately reflects the information provided in the sources.

The content will be approved if the video’s summary aligns with the source summaries, indicating that the information presented is accurate and corresponds with the external sources. This approval signals that the video is credible and trustworthy. On the other hand, if there are significant discrepancies between the summaries—such as factual errors, misleading claims, or mismatches in key points—the algorithm will deny the content. Denial serves as a safeguard to ensure that only accurate and reliable information is accepted.
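One possible way to implement the comparison and the approve/deny decision is sketched below, assuming the summaries are already available as plain strings. It uses cosine similarity over word counts, which is a stand-in chosen for simplicity rather than the method prescribed by the text. The `threshold` value and the rule that every source must match are likewise assumptions. Note that word overlap cannot detect a negated or reversed claim, so a real system would need a stronger semantic check.

```python
import math
import re
from collections import Counter


def word_counts(text: str) -> Counter:
    """Count lowercase alphanumeric words in the text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: str, b: str) -> float:
    """Cosine similarity of the two texts' word-count vectors (0.0 to 1.0)."""
    va, vb = word_counts(a), word_counts(b)
    dot = sum(va[w] * vb[w] for w in va.keys() & vb.keys())
    norm = (math.sqrt(sum(c * c for c in va.values()))
            * math.sqrt(sum(c * c for c in vb.values())))
    return dot / norm if norm else 0.0


def decide(video_summary: str, source_summaries: list[str],
           threshold: float = 0.5) -> str:
    """Approve only if every source summary is similar enough to the video summary."""
    if not source_summaries:
        return "deny"  # nothing to verify against
    scores = [cosine_similarity(video_summary, s) for s in source_summaries]
    # Assumption: the weakest match decides, so one unrelated source blocks approval.
    return "approve" if min(scores) >= threshold else "deny"


video = "sea levels rose 20 cm since 1900"
source = "global sea levels rose about 20 cm since 1900"
unrelated = "the recipe uses two cups of flour"
print(decide(video, [source]))
print(decide(video, [source, unrelated]))
```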

This process enables a more automated and systematic way of verifying the authenticity of video content, particularly when it relies on external sources for validation. By converting video content and sources into summarized forms, the algorithm can efficiently assess the accuracy of information, helping ensure that the content meets high standards of truthfulness and reliability.

Authenticating text content becomes a more straightforward process when we use summarization. The first step is to generate a summary of the content in question, whether it is an article, blog post, or other written material. This summary captures the key points and essential details of the content.

Next, we compare this summary with those derived from the source or citation links the content references. These sources can include original articles, research papers, or any other authoritative material the content is based on. By comparing both summaries, we can assess whether the information aligns and is accurate. If the summary of the content matches the summary from the source, it strongly indicates that the content is authentic and reliable. On the other hand, discrepancies between the two summaries may signal potential issues with accuracy or authenticity, prompting further investigation or denial of the content. This approach simplifies the process of verifying whether the information in the text is trustworthy, relying on the power of summarization and comparison.
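Because the text allows for either further investigation or denial when summaries diverge, a text check could return three outcomes instead of two. The sketch below is one hypothetical way to do that, using Jaccard overlap of key terms. The cut-off values `approve_at` and `review_at`, the definition of a key term, and the function names are all assumptions for illustration.

```python
import re


def key_terms(text: str) -> set[str]:
    """Lowercase words of four or more letters, plus any numbers."""
    return set(re.findall(r"[a-z]{4,}|\d+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of terms the two sets have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def authenticate_text(content_summary: str, source_summary: str,
                      approve_at: float = 0.6, review_at: float = 0.3) -> str:
    """Map term overlap to one of three outcomes; thresholds are illustrative."""
    score = jaccard(key_terms(content_summary), key_terms(source_summary))
    if score >= approve_at:
        return "approve"
    if score >= review_at:
        return "investigate"  # partial match: hand over to a human reviewer
    return "deny"


source = "A study found regular exercise reduces stress levels among adults"
faithful = "The study found that regular exercise reduces stress in adults"
partial = "The study found that exercise improves sleep in adults"
unrelated = "Flour prices rose sharply last month"
for summary in (faithful, partial, unrelated):
    print(authenticate_text(summary, source))
```

The middle band gives human moderators a queue of borderline cases, rather than forcing the automated check to make every decision on its own.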

Ultimately, the responsibility of ensuring that content shared on social media platforms is authentic, accurate, and non-harmful lies with the platform owners and CEOs. This can be achieved by implementing content verification systems, educating users, utilizing AI-powered tools, enforcing stricter moderation policies, and maintaining transparency in operations. By adopting these solutions, social media platforms can play a crucial role in reducing misinformation and creating a healthier online environment.

To sum up, by requiring users to provide citations or source links when uploading content under the "Information or Education" category, platforms can improve the reliability and verifiability of the shared content. This enhances the platform’s overall quality and fosters a more informed and trustworthy user community. In essence, categorizing content before upload adds significant value by improving user experience, enhancing content quality control, and ensuring that content reaches the appropriate audience. Verifying content within its category helps prevent incorrect information from spreading to others.